So, Twitter have attempted to improve performance of their site again.

Interestingly, they’ve decided to back away from the JS-driven #! approach that caused so much fuss last year, and move back to an initial-payload method (i.e. they’re sending the content with the original response, instead of sending back a very lightweight response and loading the content with JavaScript).

Their reasons for doing so all boil down to “We think it makes the site more performant”. They’ve based their measurement of “How fast is my site?” on the time until the first tweet on any page is rendered, and with some (probably fairly involved) testing, they’ve decided that the initial-payload method gives them the fastest “first tweet” time.

The rest of their approach involves grouping their JavaScript into modules, and serving modules in bundles based on the required functionality. This should theoretically allow them to only deliver content (JavaScript, in this case) that they need, and only when they need it.

I have a few issues with the initial-payload approach, from a performance optimisation standpoint (ironic, huh?), and a few more with their reasons for picking this route.

Let’s start right at the beginning, with the first request from a user who’s never been to your site before (or any site at all, for that matter). They’ve got a completely empty cache, so they need to load all your assets. These users probably account for between 40 and 60 percent of your traffic.

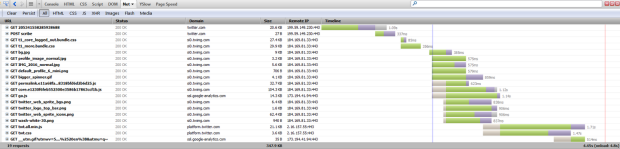

For an empty-cache request to an arbitrary tweet, the user has to load 18 separate items of content, plus perform a POST request to “/scribe”, which seems to be some form of tracking. This POST request is the second request fired from the page, and it blocks the load of static content, which is obviously fairly bad.

Interestingly, most of the content that’s loaded by the page is not actually rendered in the initial page request; it’s additional content that is needed for functionality that’s not available from the get-go. The response is also not minified: there’s a lot of whitespace in there that could quite easily be removed either on-the-fly (which is admittedly CPU intensive), or at application deploy time.

There we have stumbled upon my issue with the reasons for Twitter taking the initial-payload approach to content delivery: They’re doing it wrong.

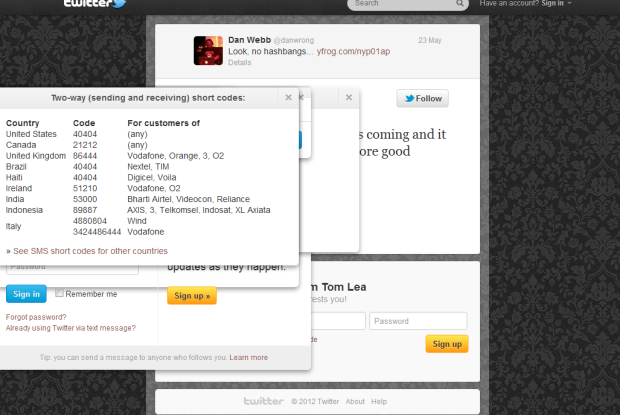

They are sending more than just the initially required content with the payload. In fact, they’re sending more than 10 modal popouts, each of which is only used in its own specific circumstance. Quite simply, this is additional data that does not need to be transferred to the client yet.

I’ve un-hidden all the additional content from the linked tweet page in this screenshot; there are around 10 popups. I particularly like the SMS codes one. I wonder what percentage of Twitter users have ever seen that?

The worst thing is, if someone comes onto the new Twitter page without JavaScript enabled, they’re going to get a payload that includes a bunch of modal popouts that they cannot use without a postback and a new payload. So, people who have JS enabled are getting data in the initial payload that could be loaded (and cached) on the fly after the page is done rendering, and people without JS enabled are getting data in the initial payload that they simply can’t make use of.

How would I handle this situation, in an ideal world?

Well, first I’d ditch the arbitrary “first-tweet” metric. If they were really worried about the time until the first tweet is rendered, they’d be sending the first tweet, and the first tweet only, with the initial payload. That’s a really easy way to hit your performance target: make an arbitrary one, and arbitrarily hit it. My performance target would be the time until the page render is complete, i.e. the time from the initial request to the server right up to the point where the background is done rendering and the page is fully functional, so that the user can achieve the action they came to the page to do. If this is a page displaying a single Tweet, then the page is fully functional when the Tweet is readable; if this is a sign-up page, the page is fully functional when the user can begin to fill out the sign-up form, and so on. This will force the developers to think about what really needs to go with the initial payload, and what content can be dynamically loaded after rendering is complete.

I would then look at the fastest way to get to a fully functional page, as soon as possible. In my opinion, the way to do this is to have a basic payload, which is as small as possible but large enough to give the end-user an indication that something is happening, and then dynamically load the minimum amount of data that will fill the page content. After the page content is retrieved and rendered, that’s the time to start adding things like popouts, adverts, fancy backgrounds, etc., things that add to the user experience, but that are not needed in the initial load in 100% of cases.
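As a rough sketch of that ordering (this is my own illustration, not anything Twitter ships): a tiny queue that holds back the non-essential extras until the main content has been rendered. `StagedLoader`, `defer` and `markContentRendered` are names I made up for the example.

```typescript
type Task = () => void;

// Hypothetical staged loader: extras wait until the main content is on screen.
class StagedLoader {
  private deferred: Task[] = [];
  private contentReady = false;

  // Register a non-essential extra (popouts, adverts, fancy backgrounds).
  // If the content is already rendered, the extra runs straight away.
  defer(task: Task): void {
    if (this.contentReady) {
      task();
    } else {
      this.deferred.push(task);
    }
  }

  // Call this once the page content has been fetched and rendered.
  // Queued extras then run in the order they were registered.
  markContentRendered(): void {
    this.contentReady = true;
    const queued = this.deferred;
    this.deferred = [];
    for (const task of queued) {
      task();
    }
  }
}

// Example: the extras are registered early but only run after the tweet.
const stages: string[] = [];
const loader = new StagedLoader();
loader.defer(() => { stages.push("popouts"); });
loader.defer(() => { stages.push("adverts"); });
stages.push("tweet rendered");
loader.markContentRendered();
```

In a real page the deferred tasks would kick off their own requests; the point is only that nothing optional competes with the content the user actually came for.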

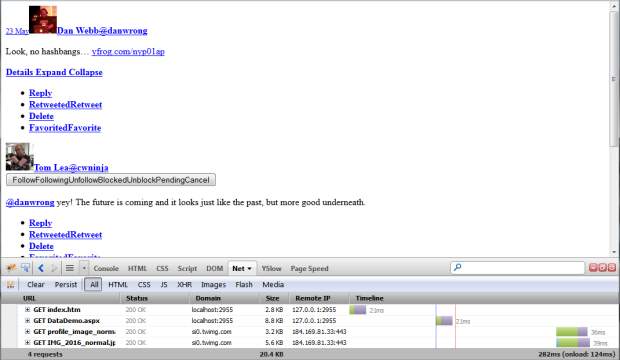

I’ve written a really simple application to test how fast I can feasibly load a small amount of data after the initial payload. I estimate that by using the bare minimum of JavaScript (a raw XHR call, no jQuery, no imports) at the bottom of the BODY tag, I can cut parsing down to around 10ms, and return a full page in around 100ms locally. Obviously the additional content loads are not counted towards this; I’d probably not load the images automatically if this were a more comprehensive PoC.

A break-down of the mockup I made, which loads the tweet content from the original linked tweet. It’s a contrived example, but it’s also a good indicator for the sort of speed you can get when you really think about optimising a page.

Add in some static assets and render time, and you’re still looking at a very fast page turnaround, as well as a very low-bandwidth cost.
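To give a flavour of what “a raw XHR call, no jQuery, no imports” can look like, here is a minimal sketch. It is not the mockup’s actual source: the `Tweet` shape, the `loadTweet` name, the URL in the comment and the element id are all assumptions for illustration. The XHR object is passed in through a factory so the function can be exercised outside a browser; in a page you would hand it `() => new XMLHttpRequest()`.

```typescript
// The small subset of XMLHttpRequest that this sketch relies on.
interface MinimalXhr {
  open(method: string, url: string): void;
  send(): void;
  onload: ((ev: any) => void) | null;
  status: number;
  responseText: string;
}

// Assumed shape of the JSON returned by the (hypothetical) tweet service.
interface Tweet {
  author: string;
  text: string;
}

// Fetch one tweet as JSON and hand it to `render` when it arrives.
// Non-200 responses are silently ignored to keep the sketch short.
function loadTweet(
  url: string,
  createXhr: () => MinimalXhr,
  render: (tweet: Tweet) => void
): void {
  const xhr = createXhr();
  xhr.open("GET", url);
  xhr.onload = () => {
    if (xhr.status === 200) {
      render(JSON.parse(xhr.responseText) as Tweet);
    }
  };
  xhr.send();
}

// In a browser, in an inline script at the bottom of the BODY tag
// (URL and element id are made up for the example):
//
// loadTweet("/tweet/123.json", () => new XMLHttpRequest(), (tweet) => {
//   document.getElementById("tweet")!.textContent =
//     tweet.author + ": " + tweet.text;
// });
```

Because the script sits at the end of the document, the placeholder element already exists by the time the response comes back, so there is no need to wait for a DOM-ready event.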

The idea that Twitter will load all their JS in modules is not a completely bad one. Obviously there will be functionality that has its own scripts that can be loaded separately, and loading those scripts when they’re not needed is fairly daft. However, it really depends on numbers; with each separate JS payload you’re looking at a few hundred milliseconds of wait time (if you’re not using a cached version of the script), so you’ll want to group the JS into as few packages as possible. If you can avoid loading packages until they’re really needed (e.g. if you have lightbox functionality, you could feasibly load the JS that drives the functionality while the lightbox is fading into view) then you can really improve the perceived performance of your site. The iPhone is a good example of this; the phone itself is not particularly performant, but by adding animations that run while the phone is loading new content (such as while switching between pages of apps on the homescreen) the perceived speed of the phone is very high, as the user doesn’t notice the delay.
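The lightbox idea can be sketched in a few lines. This is an illustration under my own assumptions, not a description of Twitter’s module loader: `loadOnce` and `openLightbox` are hypothetical helpers, and the functions that actually fetch the script and run the fade are passed in by the caller.

```typescript
type Callback = () => void;

// Wrap a script fetch so it happens at most once, no matter how many
// callers ask for it. Callers that arrive mid-fetch are queued.
function loadOnce(fetchScript: (done: Callback) => void): (ready: Callback) => void {
  let state: "idle" | "loading" | "loaded" = "idle";
  const waiting: Callback[] = [];
  return (ready) => {
    if (state === "loaded") {
      ready();
      return;
    }
    waiting.push(ready);
    if (state === "loading") {
      return;
    }
    state = "loading";
    fetchScript(() => {
      state = "loaded";
      while (waiting.length > 0) {
        const next = waiting.shift();
        if (next) {
          next();
        }
      }
    });
  };
}

// Start the fade-in and the script load at the same time, and only show
// the lightbox content once both have finished.
function openLightbox(
  animate: (done: Callback) => void,
  ensureScript: (ready: Callback) => void,
  show: Callback
): void {
  let pending = 2;
  const finish = () => {
    pending -= 1;
    if (pending === 0) {
      show();
    }
  };
  animate(finish);
  ensureScript(finish);
}
```

The first time the lightbox opens, the user waits for whichever is slower, the animation or the download, rather than the two added together; on every later open the script is already there and only the animation remains.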

The final thing I would look at is JavaScript templates. John Resig wrote a very small, very simple, JS template function that can work very well as a micro view engine; my testing indicates it can be parsed in around 1ms on my high-spec laptop. Sticking this inline in the initial payload, along with a small JS template that can be used to render data returned from a web service, will allow very fast render times. Admittedly, on browsers with slow JS parsing this will provide a worse response turnaround, but that’s where progressive enhancement comes into play. A quick browser sniff would let you pass a parameter to your service, telling it to return raw HTML instead of a JSON string, which would allow faster rendering where JS is your bottleneck.
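To make that concrete, here is a deliberately simplified stand-in for a micro view engine. It is not Resig’s function (his compiles templates and supports embedded logic); this one only substitutes `<%= name %>` placeholders, which is enough to show the shape of the approach. The `format` query parameter and the `/tweet/` URL are assumptions of mine, standing in for whatever your service would actually accept.

```typescript
// Escape the characters that matter when dropping text into HTML.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Replace each <%= key %> placeholder with the escaped value from `data`.
// Keys missing from `data` render as an empty string.
function renderTemplate(template: string, data: { [key: string]: string }): string {
  return template.replace(/<%=\s*(\w+)\s*%>/g, (_match: string, key: string) => {
    const value = Object.prototype.hasOwnProperty.call(data, key) ? data[key] : "";
    return escapeHtml(typeof value === "string" ? value : "");
  });
}

// Hypothetical switch: ask the service for ready-made HTML when the
// browser has been judged too slow to render JSON client-side.
function tweetUrl(id: string, slowJsEngine: boolean): string {
  const format = slowJsEngine ? "html" : "json";
  return "/tweet/" + encodeURIComponent(id) + "?format=" + format;
}

// Example: render a tweet from data returned by the JSON service.
const tweetTemplate = '<p class="tweet"><%= author %>: <%= text %></p>';
const tweetHtml = renderTemplate(tweetTemplate, { author: "@alice", text: "1 < 2" });
// tweetHtml is '<p class="tweet">@alice: 1 &lt; 2</p>'
```

How you decide that a browser counts as “slow” is the hard part and is left out here; the sketch only shows that the decision can be reduced to a single parameter on the request.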

The key thing to take from this is that if you are looking for extreme performance gains you should look to minimise the initial payload of your application. Whether you should do this via JavaScript with a JS view engine and JSON web services, or via a minified HTML initial payload, really depends on your user base; there’s never a one-size-fits-all approach in these situations.

Using JS as the main driver for your website is a fairly new approach that really shows how key JavaScript is as the language of the web. It’s an approach that requires some careful tweaking, as well as a very in-depth knowledge of JS, user experience, and performance optimisation; it’s also an approach that, when done correctly, can really drive your website performance into the stratosphere. Why a website like Twitter, with no complex logic and a vested interest in being as performant as possible, would take a step back towards the bad old days of “the response is the full picture” is a question I did not expect to have to ask myself.